GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection

GaLore: Memory-Efficient LLM Training

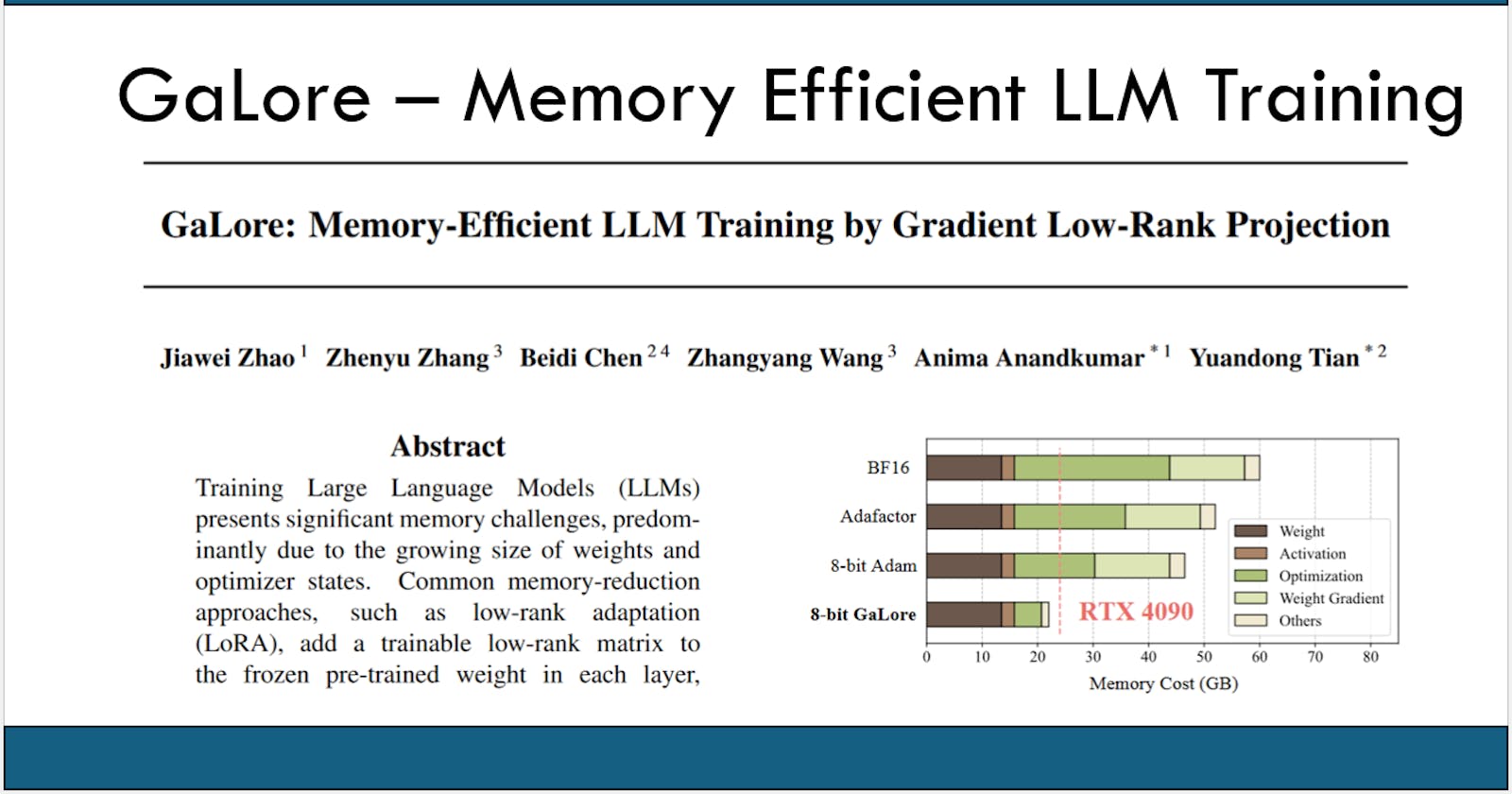

TLDR - Training Large Language Models (LLMs) presents significant memory challenges because of their large sizes. Approaches like LoRA typically underperform training with full-rank weights in both pre-training and fine-tuning stages since they limit the parameter search to a low-rank subspace and alter the training dynamics, and further, may require full-rank warm start. This paper introduces GaLore, a new memory-efficient LLM training method.

--> For video tutorials on top LLM papers, check Kalyan KS YouTube channel

--> For top LLM papers of the week, check the newsletter.

Core Challenge

Training increasingly large Language Models (LLMs) poses immense memory demands due to the massive quantity of weights (parameters defining the model) and optimizer states (data tracking how to update weights during training).

LoRA Drawbacks

Methods like Low-Rank Adaptation (LoRA) attempt to reduce memory needs. LoRA inserts trainable low-rank matrices into pre-trained models. While reducing parameters and optimizer states, this has several drawbacks:

Performance often suffers in pre-training and fine-tuning as the search for optimal parameters is constrained to a low-rank space, which changes how the model learns.

A full-rank initialization ("warm start") may still be needed.

GaLore Approach

Gradient Low-Rank Projection (GaLore) is introduced as a more memory-efficient training strategy. Here's its essence:

Full Learning: GaLore permits full-parameter learning, avoiding the limitations of low-rank constraints.

Memory Savings: It strategically reduces optimizer state memory by up to 65.5%.

Efficiency and Performance: GaLore achieves this memory reduction without compromising speed or model performance.

Experiment Results

Pre-training: GaLore successfully pre-trains LLaMA models (1B and 7B parameters) on consumer-level GPUs, a feat previously difficult due to memory constraints.

Fine-tuning: Performance on the GLUE benchmark (language understanding tasks) remains strong during RoBERTa model fine-tuning.

8-bit GaLore: Further optimizer memory reduction (up to 82.5%) is achieved, lowering overall training memory by 63.3%.

Key Takeaways

GaLore represents an advance in memory-efficient LLM training, allowing powerful models to be trained with reduced hardware requirements.

Unlike some methods, it doesn't sacrifice model performance to achieve memory savings.

--> For complete details, refer to the GaLore paper.