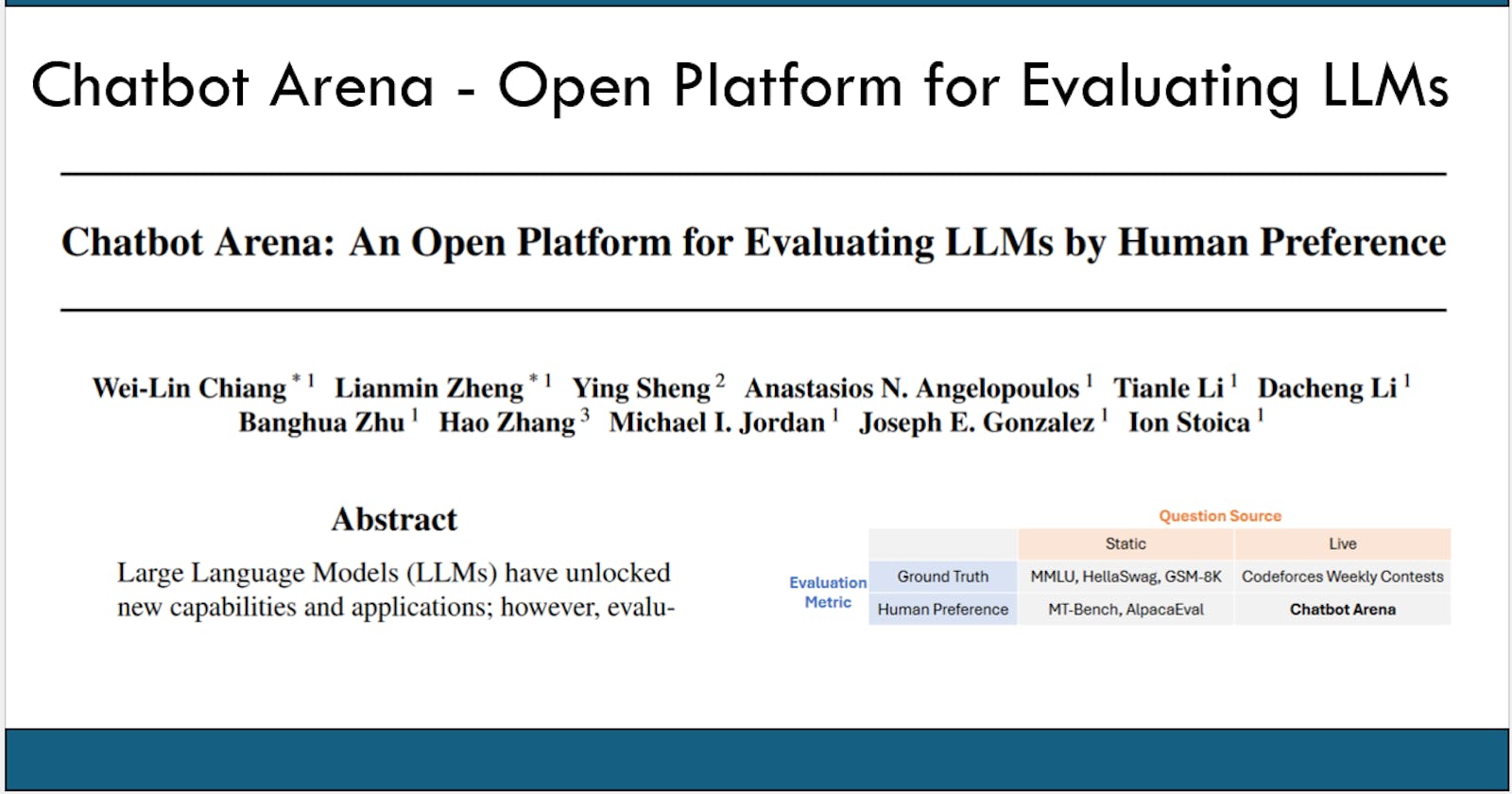

Chatbot Arena: An Open Platform for Evaluating LLMs by Human Preference

Chatbot Arena is a new open platform for evaluating LLMs

TLDR - Large Language Models (LLMs) offer new capabilities, but evaluating how well they align with human preferences is difficult. Chatbot Arena is a new open platform built specifically to address this evaluation challenge. It evaluates models through pairwise comparisons, crowdsourcing human judgments about which of two responses is better. The platform has been widely adopted, collecting over 240K votes.

--> For video tutorials on top LLM papers, check out the Kalyan KS YouTube channel.

--> For top LLM papers of the week, check the newsletter.

Recent Developments in Large Language Models

Large language models (LLMs) have rapidly advanced, demonstrating capabilities in a wide range of tasks.

This progress raises the question of how to evaluate these models accurately, especially in real-world settings where what matters most is whether their responses match human preferences.

Current Limitations in Evaluating LLMs

Most existing LLM benchmarks have several significant limitations:

They often rely on closed-ended questions (such as multiple-choice items) that do not reflect the open-ended, flexible nature of real-world interactions.

Their datasets are static, so test questions can leak into training data (contamination), making results less reliable over time.

For complex, open-ended tasks, establishing an absolute "ground truth" answer is often impractical or impossible.

The Need for Human-Preference Based Evaluation

To better assess the capabilities of modern LLMs, we need an open evaluation platform that:

Reflects real-world use with fresh, open-ended questions.

Prioritizes human preferences to assess how well models align with user needs.

Introducing Chatbot Arena

Chatbot Arena is a new benchmarking platform for LLMs that addresses these shortcomings.

Key features:

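Crowdsourced model evaluation: Users chat with two anonymous LLMs side by side and vote for the response they prefer. The models' identities are revealed only after the vote.

Live and diverse questions: Prompts come from real users, so the evaluation reflects real use cases rather than a fixed test set.

Statistical ranking models: Statistical methods convert the collected votes into LLM rankings, along with estimates of how reliable those rankings are.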
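The ranking step can be illustrated with the Bradley-Terry model, which the Chatbot Arena paper uses as the basis of its rankings. The sketch below is not the paper's implementation: it is a minimal example that ignores ties and fits model strengths with Hunter's minorization-maximization (MM) updates. The function `fit_bradley_terry`, the model names, and the toy votes are all hypothetical.

```python
from collections import defaultdict


def fit_bradley_terry(votes, iters=200):
    """Fit Bradley-Terry strengths to (winner, loser) votes with MM updates.

    Hypothetical helper for illustration only: ties are ignored, and the vote
    graph is assumed connected with every model having at least one win.
    """
    models = sorted({m for pair in votes for m in pair})
    wins = defaultdict(int)   # total wins per model
    games = defaultdict(int)  # number of votes per unordered model pair
    for winner, loser in votes:
        wins[winner] += 1
        games[frozenset((winner, loser))] += 1

    strength = {m: 1.0 for m in models}
    for _ in range(iters):
        new = {}
        for i in models:
            # MM update: p_i <- W_i / sum_j n_ij / (p_i + p_j)
            denom = sum(
                n / (strength[i] + strength[j])
                for pair, n in games.items() if i in pair
                for j in pair if j != i
            )
            new[i] = wins[i] / denom
        # Only strength ratios matter, so rescale to keep the mean at 1
        total = sum(new.values())
        strength = {m: len(models) * s / total for m, s in new.items()}
    return strength


# Toy data (hypothetical): each pair is compared 3 times
votes = [
    ("model_a", "model_b"), ("model_a", "model_b"), ("model_b", "model_a"),
    ("model_b", "model_c"), ("model_b", "model_c"), ("model_c", "model_b"),
    ("model_a", "model_c"), ("model_a", "model_c"), ("model_c", "model_a"),
]
scores = fit_bradley_terry(votes)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['model_a', 'model_b', 'model_c']
```

Under the Bradley-Terry model, model i beats model j with probability p_i / (p_i + p_j), so only the ratios of strengths matter; the rescaling step just fixes the arbitrary overall scale. Such strengths are often displayed on a logarithmic, Elo-like scale.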

Chatbot Arena's Success and Contributions

Chatbot Arena has been widely adopted, collecting over 240K votes from around 90K users.

The collected prompts are diverse and offer insight into how LLMs are used in practice, and the platform's broad adoption has demonstrated its value to the industry.

Key contributions of this work:

The creation of the first large-scale, crowdsourced LLM evaluation platform.

Release of a dataset with over 100K pairwise LLM comparisons based on human preferences.

Development of efficient sampling algorithms that choose which model pairs to compare, so that accurate rankings can be reached with fewer votes.
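The idea behind efficient sampling can be sketched with a simplified, hypothetical heuristic: pairs that have received few votes are shown more often, because one extra vote shrinks a 1/sqrt(n) uncertainty proxy the most when n is small. The paper's own active sampling rule is more involved and is based on the estimated uncertainty of the fitted scores. The functions `pair_weights` and `sample_pair` and the vote counts below are illustrative assumptions.

```python
import math
import random
from itertools import combinations


def pair_weights(models, counts):
    """Hypothetical heuristic: weight each model pair by how much one more vote
    would shrink the uncertainty proxy 1/sqrt(n + 1), where n is the number of
    votes already collected for that pair. Not the paper's sampling rule.
    """
    weights = {}
    for pair in combinations(sorted(models), 2):
        n = counts.get(pair, 0)  # keys are alphabetically sorted (x, y) tuples
        weights[pair] = 1 / math.sqrt(n + 1) - 1 / math.sqrt(n + 2)
    return weights


def sample_pair(models, counts, rng):
    """Draw the next pair to show users, with probability proportional to its weight."""
    weights = pair_weights(models, counts)
    pairs = list(weights)
    return rng.choices(pairs, weights=[weights[p] for p in pairs], k=1)[0]


# Hypothetical vote counts: the (model_b, model_c) pair is under-sampled
models = ["model_a", "model_b", "model_c"]
counts = {("model_a", "model_b"): 50, ("model_a", "model_c"): 50, ("model_b", "model_c"): 2}
w = pair_weights(models, counts)
print(max(w, key=w.get))  # ('model_b', 'model_c')
print(sample_pair(models, counts, random.Random(0)))
```

In a live system the counts would be updated after every vote, so sampling keeps shifting toward whichever pairs currently have the least data.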

--> For complete details, refer to the Chatbot Arena paper.